NAVODAYA STUDIO

LIBSSM

Large Imaging with BeamSplitter Sensor Matrices

(conceptual design & illustration. 2 August 2018)

Jijo. 2 August 2010

The author is a filmmaker with a record of technological innovation

in cinema and mass entertainment.

This paper is archived in viXra

http://viXra.org/abs/1808.0632

reference: 10101301

timestamp: 2018-08-30 02:17:43

viXra citation number: 1808.0632

latest version: v1

subject category: Classical Physics

Title: Large Imaging with Beam Splitter Sensor Matrices

Authors: Jijo Punnoose

Abstract: Extending the use of beam-splitter in stereo camera imaging, the author - a 3D filmmaker,

is proposing how segments of multiple images could be captured by optical sensor matrices and then, combined to create very large high-resolution images.

18 Pages.

A beam splitter is an optical device that splits a beam of light in two.

Schematic illustration of a beam splitter cube.

1 - Incident light

2 - 50% Transmitted light

3 - 50% Reflected light

In practice, the reflective layer absorbs some light.

Another way is to use a half-silvered mirror

(dichroic optical coating on optically clear glass plate).

https://en.wikipedia.org/wiki/Beam_splitter

In motion picture cinematography at least, you cannot capture segments of one large subject with individual lenses and then combine them, optically or digitally, into a single image sequence. The reason is that no two lenses are identical in their optical qualities (lens aberration, for instance).

The major shortcoming of the CINERAMA process (1952) was not that multiple projectors couldn't be aligned to project segments of an image seamlessly ... but that, separate shooting lenses could never be aligned to take in segments of a subject seamlessly.

That answers the question - Why Beamsplitters?

Today, Digital Cinema Projectors can be aligned pixel-to-pixel, seamlessly.

But not shooting lenses.

Hence, outlined here is a concept for splitting a Very Large Image with beamsplitters, and using an array of sensors to capture segments of that image onto computers.

Arraying of sensors adjacent to each other doesn’t help. For, when placed close together, the sensors don't join seamlessly. Such arraying is possible for digital photography of images in astronomy. For cinema ... well, we have an example in CINERAMA.

As shown, four quadrants taken with 4 lenses do not join seamlessly (simulated, image courtesy film La La Land).

Again, an array of CMOS sensors placed side by side does not join seamlessly either. (Hence the need to use alternate images for adjacent sensors.)

First, let us see how 2 identical images can be created with one beamsplitter.

Schematic Illustrations by Narayana Moorthy, Navodaya (image courtesy film La La Land)

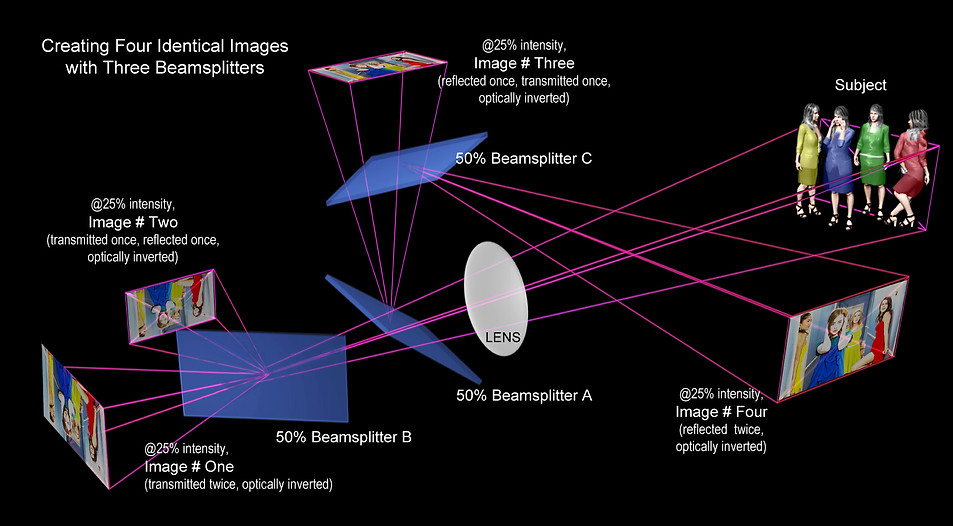

Now, let us see how 4 identical images can be created with 3 beamsplitters.

Schematic Illustrations by Narayana Moorthy, Navodaya (image courtesy film La La Land)
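Splitting the image also splits the light. As a rough sketch of the light budget, assume ideal 50/50 beamsplitters arranged as a tree, so that each of the 4 images has passed through 2 splitters. The tree arrangement, the function names and the `absorption` parameter are assumptions made for illustration; as noted earlier, a real reflective layer absorbs some light, so actual figures would be somewhat lower.

```python
import math

def light_per_output(stages: int, split: float = 0.5, absorption: float = 0.0) -> float:
    """Fraction of the incident light that reaches each output image.

    stages     -- number of beamsplitters each output has passed through
    split      -- fraction sent to each arm (0.5 for an ideal 50/50 splitter)
    absorption -- assumed fractional loss per splitter (0.0 means ideal)
    """
    per_stage = split * (1.0 - absorption)
    return per_stage ** stages

def stops_lost(fraction: float) -> float:
    """Exposure loss in photographic stops for a given light fraction."""
    return -math.log2(fraction)

two_images = light_per_output(stages=1)   # one beamsplitter
four_images = light_per_output(stages=2)  # three beamsplitters in a tree

print(f"2 images: {two_images:.0%} of the light each, {stops_lost(two_images):.1f} stop lost")
print(f"4 images: {four_images:.0%} of the light each, {stops_lost(four_images):.1f} stops lost")
```

Under these ideal assumptions each of the four images receives a quarter of the incident light, which the exposure has to make up for.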

Once you have four identical images, you can divide them into 4 segments with

(A) 2 X 2 matrix.

Or a maximum of 16 segments with

(B) 4 X 4 matrix.

HOW TO CREATE A 2 X 2 matrix.

For instance, consider Sensors a, b, c & d as Alexa 65mm.

Each of Size 54.12mm X 25.58mm. Resolution 6560 X 3108

In effect, Alexa 65mm Sensor X 4 numbers (2 X 2 matrix)

each of Size : 54.12mm x 25.58mm. Resolution 6560 x 3108

When stitched together, the image would be about

3 times the resolution of 65mm (5 perf.) film image.

Which would make this about the same as Imax (15 perf.) film.

And about 7.5 times the resolution of 35mm (full aperture) film.

2 X 2
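The arithmetic behind the stitched 2 X 2 image can be sketched as below. It assumes the four segments butt together perfectly with no overlap, which a practical stitch would probably not achieve; the function name and the dictionary keys are illustrative only. The sensor figures are the ones quoted above.

```python
def stitched(rows, cols, width_px, height_px, width_mm, height_mm):
    """Pixel count and effective image-plane size of a rows x cols sensor matrix,
    assuming the segments join edge to edge with no overlap."""
    total_w_px = cols * width_px
    total_h_px = rows * height_px
    return {
        "pixels": (total_w_px, total_h_px),
        "megapixels": total_w_px * total_h_px / 1e6,
        "size_mm": (round(cols * width_mm, 2), round(rows * height_mm, 2)),
    }

# Four sensors of 54.12mm x 25.58mm, 6560 x 3108 each, as a 2 X 2 matrix
matrix_2x2 = stitched(2, 2, 6560, 3108, 54.12, 25.58)
print(matrix_2x2)
```

The same function can be called with `rows=4, cols=4` and the 4 X 4 sensor figures to size the larger matrix.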

To add dynamism to this large image, recording the images in 3D with a High Frame Rate (60fps) architecture should also be considered.

For such a scenario, it may require coupled drives with banks of servers sharing a master clock.
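To get a feel for why banks of servers may be needed, here is a back-of-the-envelope estimate of the uncompressed data rate for the 2 X 2 matrix shot in 3D at 60fps. The 12 bits per pixel is an assumption chosen for illustration (the paper does not specify a recording format), and the function name is hypothetical.

```python
def raw_rate_bytes_per_s(width_px, height_px, sensors, fps, bits_per_px, eyes=1):
    """Uncompressed data rate in bytes per second for a sensor matrix."""
    bits_per_frame = width_px * height_px * bits_per_px * sensors
    return bits_per_frame * fps * eyes / 8

# 2 X 2 matrix of 6560 x 3108 sensors, 60fps, two eyes, ASSUMED 12 bits per pixel
rate = raw_rate_bytes_per_s(6560, 3108, sensors=4, fps=60, bits_per_px=12, eyes=2)
print(f"{rate / 1e9:.2f} GB/s uncompressed")
```

Under these assumptions the figure comes to several gigabytes per second, which is the kind of load that would have to be shared across coupled drives.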

HOW TO CREATE A 4 X 4 matrix.

Now consider Sensors - 4 numbers of A, 4 numbers of B, 4 numbers of C and 4 numbers of D

- a total of 16, as RED Dragon (6K) 35 mm.

Each of Size 30.72mm X 15.8mm. Resolution 6144 X 3160.

In effect, a 4 X 4 matrix - 16 RED Dragon (6K) 35 mm.

Each of Size : 30.72 x 15.8mm. Resolution : 6144 x 3160

When stitched together, the image would have 16 times the resolution of 35mm (full aperture) film image.

In comparison, an Imax (15 perf.) film image has 7.5 times the resolution of 35mm.

And, a 65mm (5 perf.) film image has 2.5 times the resolution of 35mm.

4 X 4
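One possible reading of the 4 X 4 arrangement is sketched below: the segments are dealt out to the four identical images A, B, C and D in a repeating 2 X 2 pattern, so that no two neighbouring segments are captured from the same image copy and the sensors on any one image plane never have to touch. This pattern is an assumption made for illustration, not a layout taken from the schematic; the function names are hypothetical.

```python
def sensor_layout(rows, cols, copies=("A", "B", "C", "D")):
    """Assign each segment of a rows x cols matrix to one of four image copies,
    repeating the 2 X 2 tile  A B / C D  across the matrix."""
    tile = [[copies[0], copies[1]], [copies[2], copies[3]]]
    return [[tile[r % 2][c % 2] for c in range(cols)] for r in range(rows)]

def no_touching_same_copy(grid):
    """True if no segment has a neighbour (including diagonals) on the same copy."""
    rows, cols = len(grid), len(grid[0])
    for r in range(rows):
        for c in range(cols):
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    if (dr, dc) == (0, 0):
                        continue
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols and grid[rr][cc] == grid[r][c]:
                        return False
    return True

grid = sensor_layout(4, 4)
for row in grid:
    print(" ".join(row))
print("adjacent segments always on different copies:", no_touching_same_copy(grid))
```

With this pattern each image copy carries 4 sensors, spaced one segment apart in both directions, matching the count of 4 numbers each of A, B, C and D.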

This Large Digital Imaging could find use in different applications.

Once the images from the matrix have been stitched into a single image sequence, the play-out could be in any format. HD, Digital Cinema for Theaters ... even special venues of Mammoth Screen sizes where segments of the image are seamlessly played from multiple Digital Cinema Projectors.

That should make this a hit with Themed VR Rides.

Jijo.

Navodaya Studio, Kakkanad, Kochi. 2 August 2018

AFTERWORD for LIBSSM

Senthil went ahead to develop the Qube Cinema Projection system, which changed not only theatrical exhibition but eventually filmmaking itself, when RED Cameras replaced ARRI cameras to become the workhorse of the industry. Meanwhile, with the team at Kishkinta Amusement park, I on my part developed a Digital 3D System (2004) for shooting and exhibiting 10-minute 3D snippets at Kishkinta.

We used to say that if my brother Jose had waited, he could have become the first digital 3D feature filmmaker … an honor that went to James Cameron when he made Avatar (2009). Now, before I come to where we fell short of our ambitions in 2004 (which, with this article, I intend to accomplish now), I should mention why I opted for the 'beam splitter' for Digital 3D Imaging.

Beamsplitter is as old as the earliest 18th century optical device for measuring the speed of light. And this principle was used in making the first 3D films of the 1950s. The very same beamsplitter has today (in 2018) yet again become the film industry standard for shooting state-of-the-art live 3D productions.

Yet, seeing a bright future in taking Chris's ingenious 3D imaging technique right into the emerging digital era, I asked Chris Condon whether he could change the design for a smaller image plane? … re-center the top/bottom images for the sensor area?

In answer, the once celebrated optical engineer of Hollywood came out with a tirade against everything video and digital. He still swore by film chemistry. Alas, I realized, yet again yesterday's harbinger has become today's retarder! I was half expecting this. For, during the previous few years when we had done S3D Imaging with computer graphics (3DMax, Maya & Autodesk Inferno software), Chris was almost dismissive about the efforts. When we developed a computer reticule to measure the Z-axis, i.e., a template to enumerate spatial separation between objects in a given stereo image pair, John Rupkalvis, who had designed the 3D camera viewfinder reticule, was enthusiastic. But John was wary of Chris Condon, his mentor.

My solution was to use two digital cameras on a beamsplitter rig.

The same method was used during 3D's advent in the 1950s. One sensor for each eye would provide double the resolution.

The implications?

Let me list the pros and cons.

In the 1950s, the main problems they had encountered for such a 'paired-image capturing' were as below.

1. The dual-camera bulk.

But today, devoid of mechanical components, digital cameras are way smaller than film cameras.

Yet, there are surely two advantages in using a Paired-Lens Stereovision Architecture.

(1) Theoretically speaking, Stereovision lenses can go onto any camera ﹣ film, digital or video.

(2) By using Stereovision lenses, no bulky rig or special dual-camera support systems such as crane, jib, camera car, etc. need be resorted to.

Hence Senthil and Balaji pitched for the Stereovision architecture, while Jayendra, Jainul and I opted for the dual-camera approach because of its two advantages (mentioned below). And the majority won.

(1) The image quality for digital was undergoing quantum leaps. That meant the quality of lenses and the number of lens brands were proliferating. With Chris having stopped hand-grinding lenses at his Burbank shop, the quality of Stereovision lenses was no match for the brilliance of Zeiss, Cooke or Arri lenses. So, such professional lenses - used in pairs - would offer much better quality images than the Stereovision dual lens.

(2) A 3D rig is cumbersome, agreed. But on a 3D rig, the interocular distance between the eyes can be varied. It is a great boon for stereography - especially when CG and greenscreen matting are considered. And today, with camera and lens metadata, all variables can be tracked and controlled.

Only one additional rule has to be included (along with traditional 3D cinematography principles) when pairing digital cameras for 3D imaging. The paired cameras have to be gen-locked. They should be slaved to a single source - preferably a master external clock. Also, if the screening happens to be with dual servers and dual projectors, it is to be ascertained that the left/right image pairs are always in absolute synch. For that, the digital servers should share the same clock and the digital projectors should be gen-locked. (This practice of additional image stability goes back to the pin-registration on film cameras and sometimes even - as done by Disney in their park exhibitions, pin-registering the film projectors!)

This is about how while working on Digital 3D, Large Imaging with BeamSplitter Matrices was arrived at.

[Instead of a rambling preamble, better a rambling postamble - I thought. Jijo]

The thought process ﹣ (1) Large Image Format, (2) High Frame Rate and (3) High Dynamic Range﹣all of them for Digital 3D Imaging﹣started developing in my mind on that day in August 2001 when my colleague Senthil Kumar of Real Image suggested that my brother Jose should make his proposed 3D film - Magic Magic (2003), in Digital Cinema format. But it would have taken at least one more year to see the DCP encoding process which Senthil was developing. Since Jose couldn't wait, my brother went ahead to shoot the film in 35mm cinematographic format with Arriflex cameras and Stereovision lenses. And his film Magic Magic (2003) was released with film prints during the summer of 2003. The procedure was exactly as we had followed over the previous 2 decades ﹣ making and exhibiting 3D films with the infrastructure we had developed for My Dear Kuttichathan (1984).

Over-Under 3D image.JPG (from Wikipedia)

An "over-under" 3D frame. Both left and right eye images are contained within the normal height of a single 2D frame.

PLAN VIEW

Two Cameras with Beamsplitter.

Left Camera shooting through the glass plate.

Right Camera shooting the reflected image.

2. The reflected (right eye) image had to be optically flopped in a film lab printer.

But with computers today, an image flop can be done live!
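As a minimal illustration of how little a digital flop involves, the sketch below mirrors a frame left to right. A frame is represented here as a plain list of pixel rows, which is an assumption made to keep the example self-contained; a real pipeline would do the same operation on its own image buffers.

```python
def flop(frame):
    """Mirror a frame left to right. `frame` is a list of rows of pixel values."""
    return [row[::-1] for row in frame]

# A tiny 2 x 3 stand-in for the reflected (right eye) image
reflected = [
    [1, 2, 3],
    [4, 5, 6],
]
corrected = flop(reflected)
print(corrected)
```

Flopping twice returns the original frame, so the operation loses nothing.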

And today, if you mount the beamsplitter glass plate vertical with the right eye camera on top and inverted, then your image has corrected itself. What's more, even the rig assembly's footprint becomes smaller.

3. Keeping the two film strips from slipping. Preventing the image pair from getting mis-aligned was a matter of grave concern.

None of this is a problem today. For, throughout the entire process chain, digital images are tagged and handled by the computer.

Just as the Dual Film Strips ran on locked Cameras, the Projectors were Interlocked.

This locked projection system was there till recently - for the 3D projection of IMAX.

Since the projection of Stereovision single-strip 3D film is done by converging the top/bottom - lefteye/righteye images onto the silver screen with a split/ prism lens in front of the projector, there was no question of image pairs slipping - as seen above in the projection of My Dear Kuttichathan 3D (1984).

Now, even if the dual cameras (and projectors) are 100% identical models, they are bound to have unmatched menu settings. It is also probable that the cameras are running different versions of their software/firmware and hence behave at variance with one another. It has to be ascertained that all OS and apps in the dual systems are identical. Settings (shutter, LUT, etc.) should be reverted to default mode and then programmed afresh, identically. Any external device (like a blackburst generator), if connected, has to have dual outputs running into both cameras. If not, two identical devices with identical settings are to be used.
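The check described above amounts to comparing the two cameras setting by setting. A small sketch of such a comparison follows. The setting names and values are hypothetical; how the settings are actually read out of a given camera is not covered here and would depend on the manufacturer.

```python
def mismatched_settings(left, right):
    """Return every setting whose value differs between the two cameras,
    including settings present on only one of them."""
    keys = sorted(set(left) | set(right))
    return {k: (left.get(k), right.get(k)) for k in keys if left.get(k) != right.get(k)}

# Hypothetical read-outs from the left and right cameras
left_cam = {"firmware": "1.2.0", "shutter_deg": 180, "lut": "default", "fps": 60}
right_cam = {"firmware": "1.1.9", "shutter_deg": 180, "lut": "default", "fps": 60}

diff = mismatched_settings(left_cam, right_cam)
if diff:
    for name, (l_val, r_val) in diff.items():
        print(f"MISMATCH {name}: left={l_val} right={r_val}")
else:
    print("Left and right cameras match.")
```

An empty result would mean the pair is ready; anything else should be corrected before shooting.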

One other suggestion I had made was to use high frame rate﹣ for photography as well as for projection. Historically speaking, for cinema, when it was first devised at the beginning of the 20th century, the image capturing rate of 24 frames-per-second happened to fall short for human persistence of vision. An image flicker is just about getting avoided at 24fps. Hence, I suggested 60 frames per second. Because, 60fps is where 24fps, 25fps and 30fps non-drop would agree with each other. (Not that it matters much these days). But the important point was, Intamin and Showscan﹣ firms who provided special venue presentations at amusement centers and fairgrounds﹣ shot and projected their 65mm 2D films at 60 frames per second ... providing distinct and sharp images. Utilizing high frame rate and high resolution for 3D would give extraordinary results.

In 2004 we did shoot at 60 fps with Panasonic Varicam. Ideally, still higher frame rates have to be available (as in Phantom cameras) so that slow-motion cinematography is possible.

Pair of stacked Images falling on the sensor chip.

Since the chip area is less than that of the 35mm full aperture film gate area, the pair of images gets cut asymmetrically.

For any 2D lens, this cropping would be of no serious consequence. For, when you are shooting 2D, you frame for the image area that is cast on the sensor.

Image areas are lost at the bottom of the right-eye image and at the top of the left-eye image, i.e., asymmetrically.
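The asymmetric loss can be put into numbers with a simple sketch. It assumes the sensor is centred on the stacked pair, that the two images fill the full 18.67mm height of the 35mm full aperture gate with no gap between them, and that the left-eye image sits on top; the 12.67mm sensor height is a hypothetical value chosen only to make the arithmetic easy to follow.

```python
def over_under_crop(gate_h_mm: float, sensor_h_mm: float) -> dict:
    """Vertical loss for an over-under stereo pair cast on a smaller, centred sensor."""
    if sensor_h_mm > gate_h_mm:
        raise ValueError("sensor is taller than the film gate; nothing is cropped")
    lost = (gate_h_mm - sensor_h_mm) / 2.0  # band cut off at each outer edge
    return {
        "left_eye_lost_at_top_mm": lost,
        "right_eye_lost_at_bottom_mm": lost,
        "kept_height_per_eye_mm": gate_h_mm / 2.0 - lost,
    }

# 35mm full aperture gate height versus a HYPOTHETICAL 12.67mm-high sensor
crop = over_under_crop(gate_h_mm=18.67, sensor_h_mm=12.67)
for name, value in crop.items():
    print(f"{name}: {value:.3f}")
```

Each eye loses the same amount, but from opposite edges, so the two surviving images no longer cover the same part of the scene.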

Senthil, citing the low refresh rate of the optical chip, was not keen on high frame rates. But come NAB 2014, he did agree that the filmmaking trend was changing to high frame rate image capture. RealImage themselves have started promoting it.

Following my suggestion of how to achieve large image capture with beamsplitters, Balaji suggested that the same technique could make HDR (the high dynamic range imaging done in still photography) possible in cinematography. He was badgering Jayendra to do the film 180 (2011) in HDR. I suppose the unavailability of beamsplitter rigs deterred the attempt.

Given below is a Digital Vs. Film interocular comparison.

On the topic of our past attempts on 3D and Large Format Imaging, one more point I should be mentioning here. Alan Bartly - the tech wizard of Kodambakkom cinema, did attempt to modify one of our Stereovision lenses to shoot Digital. And it was he who said that its optical quality won't stand up to the stark reality of Digital.

In 2010, Alan tried to array digital sensors for making the concept of LIBSSM work. But those days we never had many such spare sensors around. Hence we gave up. Today, after searching the web for sensor chip arraying, I am surprised that till now no one has considered this idea. The display technology is now at the threshold of huge flat projection screens. And that is where large image fields would be necessary.

Meanwhile, ...

References

Manual of Stereoscopic Cinematography

by John A Rupkalvis and Christopher J Condon

Stereovision, Burbank. 1985.

Foundations of the Stereoscopic Cinema: A Study in Depth

by Lenny Lipton

Van Nostrand Reinhold, 1982 ISBN 0442247249, 9780442247249

Despite Senthil & Jayendra's enthusiasm to try Stereovision optics for Digital 3D imaging, I demurred on two points.

ONE - In year 2004, the number of pixels even on the best of digital sensors bestowed much less image resolution than that of a 16mm film frame!

TWO - Even by year 2008, the sensor of the digital cinema camera was so small that it would not cover the image circle of 35mm Stereovision lenses. (In fact, Jainul - our self-taught 3D wizkid and Balaji - our Digital Cinema guru, tested our patience by trying to adapt the Stereovision PL lens mount to lock with the digital camera … only to realize that a RED digital camera sensor, as claimed in 2008, was not as big as the 35mm film size (24.89mm X 18.67mm). No wonder the pair of stereo images got cropped asymmetrically!)

In 2004, Senthil and his Joint Director at RealImage - Jayendra, were keen on putting Chris Condon's lenses on a digital camera so as to follow the Stereovision architecture -- meaning, locked left eye/right eye frames, all the way from camera-shoot to theater-exhibition.

A schematic representation

of shooting and monitoring in 3D Digital (2004).

Down Converters

HD to SDTV

720p, 60fps (genlocked, progressive) dual HD SDI output

red denotes right eye stream. blue left eye stream.

Uncompressed

left, right streams onto two systems

Beamsplitter

Right Camera

Left Camera

HD aspect ratio Silver Screen

Dual Projectors Stereo aligned

left eye beam -45deg polarised

right eye beam +45deg polarised

for compositing left/right images on the convergence chart

Stereographer's SD Monitor

for setting convergence with 3D reticle

illustration by

Narayana Moorthy, Kishkinta.

A Stereovision 3D lens has dual optics within

(as in a pair of human eyes - 2.5 inches apart).

With prisms, the two images (one for the right eye,

one for the left) are stacked one above the other,

and cast onto the 35mm film gate.

And also,

NEWS “To fend off Netflix, movie theaters try 3-screen immersion”

In this photo taken on Thursday, Aug. 9, 2018, a trailer shows a car speeding through traffic as part of a demonstration for ScreenX at Cineworld in London. Sit at the back of the movie theater, and it's possible to see the appeal of ScreenX, the latest attempt to drag film lovers off the sofa and away from Netflix. Instead of one screen, there are three, creating a 270-degree view meant to add to the immersive experience you can’t get from the home TV. (AP Photo/Robert Stevens)

Hence all the more, my case for Large Imaging with BeamSplitter Sensor Matrices.

JIJO. 2 August 2018.

CONCLUDED.